I teach physics via explicit instruction, right?

My physics classes generally follow an ‘I do', ‘You do' format. We do weekly tests and, insofar as I can manage it, everything that I ask students to do is highly structured and knowledge-focussed. I think it's fair to label this ‘explicit instruction' and, as such, I've often felt a sense of validation when reading articles like this one by Paul Kirschner, which claims that PISA data demonstrate that inquiry-based instruction is no match for explicit instruction. Kirschner also suggests that, despite clear evidence, PISA doesn't want to accept its own conclusions:

“Although this distinction is very clearly shown in the figure, the PISA report is very hesitant with regards to interpreting these results. It seems as if the authors can’t bear the thought to dismiss the enquiry-learning ideology. Pathetic and unfair!”

But recently I did some reading that rocked my explicit instruction boat. The reading was in preparation for an Education Research Reading Room podcast episode with Sharon Chen in Taiwan. Entitled Inquiry Teaching and Learning: Forms, Approaches, and Embedded Views Within and Across Cultures, the paper compared inquiry-based instruction in German, Australian, and Taiwanese primary science classrooms.

All was going just fine until I came across the following sentence:

‘We could argue inquiry learning occurred not only in students’ peer dialogues and in teacher elicited dialogues with constructive activities, but in teacher guided instructional dialogues as well…’ (p. 118)

What the heck? ‘Teacher-led inquiry-based instruction'… I thought that was an oxymoron. Does that mean that I teach by inquiry?

I decided to ask my students. First, I gave them two definitions:

Explicit Instruction

- The teacher decides the learning intentions and success criteria, makes them clear to the students, demonstrates them by modeling, evaluates if students understand what they have been told by checking for understanding, and re-tells them what they have been told by tying it all together with closure (adapted from Hattie, 2009, p. 206)

Inquiry-based Instruction

- The teacher provides opportunities for students to hypothesize, to explain, to interpret, and to clarify ideas; draws upon students’ interests and engages them in activities that support the building of their knowledge; and uses structured questions and representations (diagrams, animations, live demonstrations, experiments) to assist students to learn. (adapted from Chen & Tytler, 2017, p. 118).

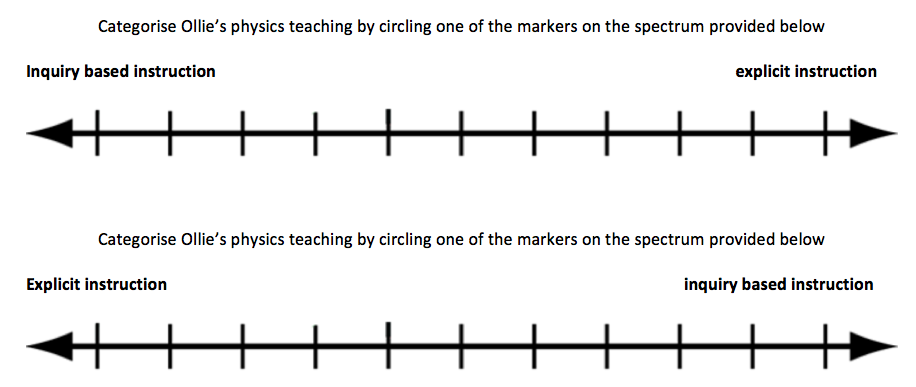

Then I gave each student one of these spectra, with the axes reversed on every other sheet to cancel out any spurious association of ‘right is better' (or the opposite).

The verdict? This is a 10-point scale and (after accounting for the flipped axes) the average rating of my physics teaching was only 0.56 points to the explicit side of centre! I'm a fence sitter (or are my students?). Fitting, seeing as the spectrum looks like a fence. I digress.
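To make ‘after accounting for the flipped axes' concrete, here is a minimal Python sketch of how such an average could be computed. It assumes each mark is recorded as a position on a 0–10 line (centre 5) together with which way round that sheet was printed; the function name `explicit_lean`, this encoding, and the sample marks are all hypothetical and are not the class's actual data.

```python
from statistics import mean


def explicit_lean(marks, low=0.0, high=10.0):
    """Average signed distance from the centre of the scale towards 'explicit'.

    marks: list of (position, explicit_on_right) pairs, where position is where
    the student marked the line and explicit_on_right records whether that
    sheet had 'explicit' printed on the right-hand end (hypothetical encoding).
    Positive results lean explicit; negative results lean inquiry.
    """
    centre = (low + high) / 2
    offsets = []
    for position, explicit_on_right in marks:
        offset = position - centre
        # On reversed sheets 'explicit' is on the left, so flip the sign
        # before averaging; otherwise the two orientations would cancel out.
        offsets.append(offset if explicit_on_right else -offset)
    return mean(offsets)


# Hypothetical marks from four students (two sheets of each orientation).
sample = [(7, True), (4, False), (5, True), (3, False)]
print(explicit_lean(sample))  # prints 1.25
```

Averaging the raw positions directly would mix the two orientations together; flipping the sign on reversed sheets first is what makes a figure like ‘0.56 points to the explicit side' interpretable.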

Both my teaching and the definitions were obviously not as clear cut as I had thought. It was time to go definition hunting.

Stop 1, PISA

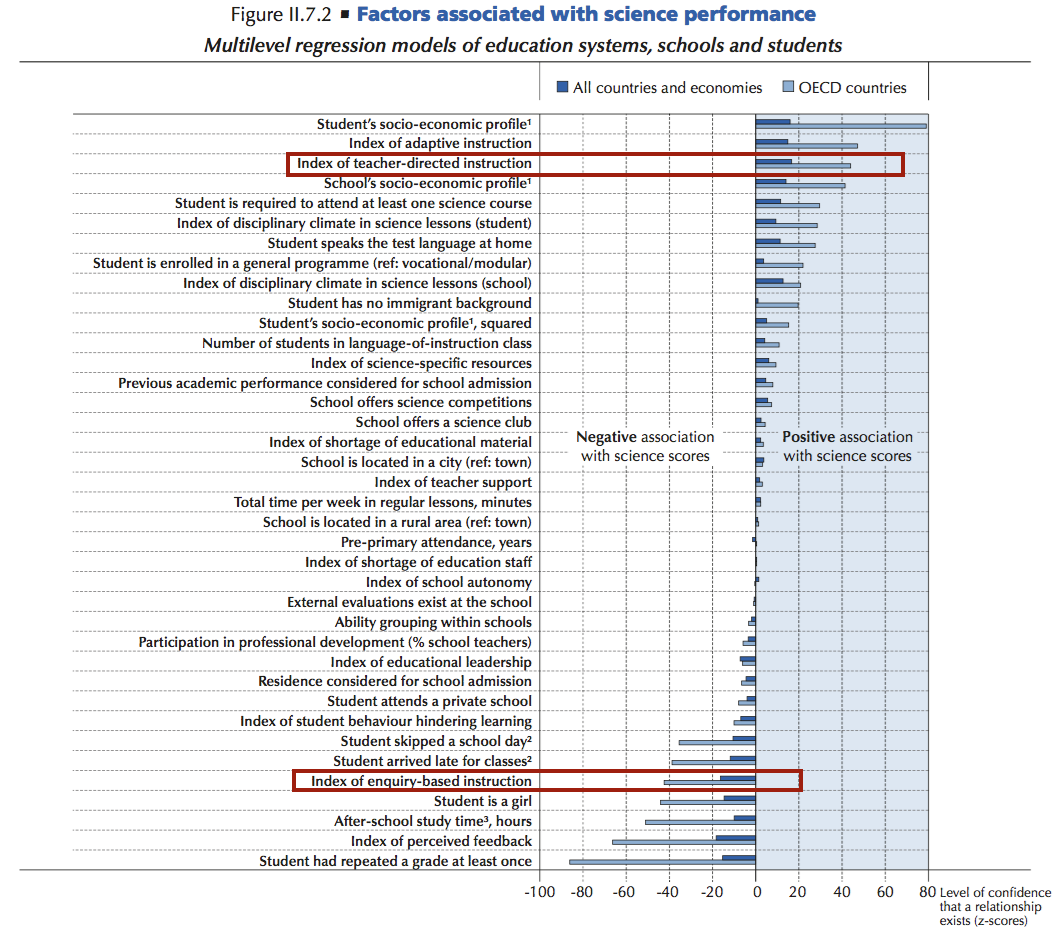

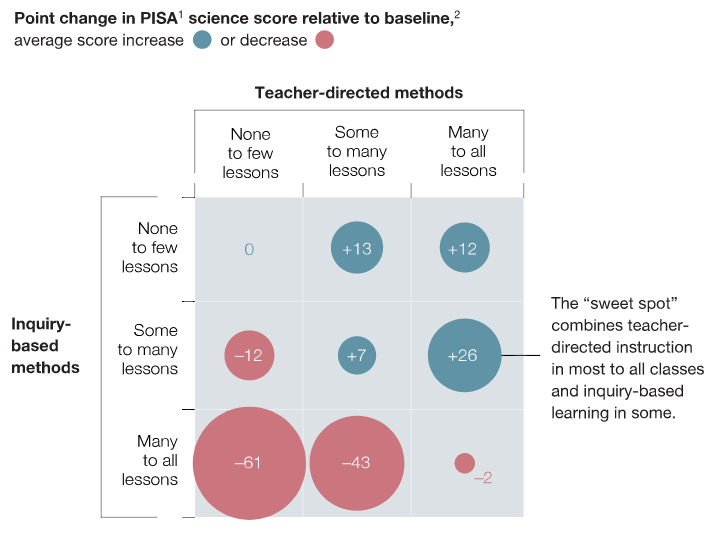

All the while I had Kirschner's article firmly in my mind, so PISA seemed like the logical place to go to explore definitions. Here is the image (red boxes in Kirschner's article) that he featured as evidence for the conclusion that inquiry-based instruction is baloney (image from the 2015 PISA results, pg. 228).

So I clearly needed to find out exactly what these ‘teacher-directed science instruction' and ‘inquiry-based science instruction' were. The following comes from page 63:

PISA asked students how frequently (“never or almost never”, “some lessons”, “many lessons” or “every lesson or almost every lesson”) the following events happen in their science lessons:

For teacher-directed instruction (often thought of as synonymous with explicit instruction, i.e., ‘sage on stage' rather than ‘guide on side'), the statements were (pg. 63):

“The teacher explains scientific ideas”; “A whole class discussion takes place with the teacher”; “The teacher discusses our questions”; and “The teacher demonstrates an idea”.

For inquiry-based instruction, the statements were (pg. 69):

“Students are given opportunities to explain their ideas”; “Students spend time in the laboratory doing practical experiments”; “Students are required to argue about science questions”; “Students are asked to draw conclusions from an experiment they have conducted”; “The teacher explains how a science idea can be applied to a number of different phenomena”; “Students are allowed to design their own experiments”; “There is a class debate about investigations”; “The teacher clearly explains the relevance of science concepts to our lives”; and “Students are asked to do an investigation to test ideas”.

Looking at these broadly, the main difference between them is the one a layperson would draw, and the one I held prior to reading Chen and Tytler's paper: inquiry-based instruction is more student-directed, whereas explicit instruction is more teacher-directed. Additionally, inquiry-based instruction is more frequently related to ‘our lives'.

This definition wasn't as complex as I'd hoped. Time to dig a little deeper.

Stop 2, Furtak's Meta-analysis

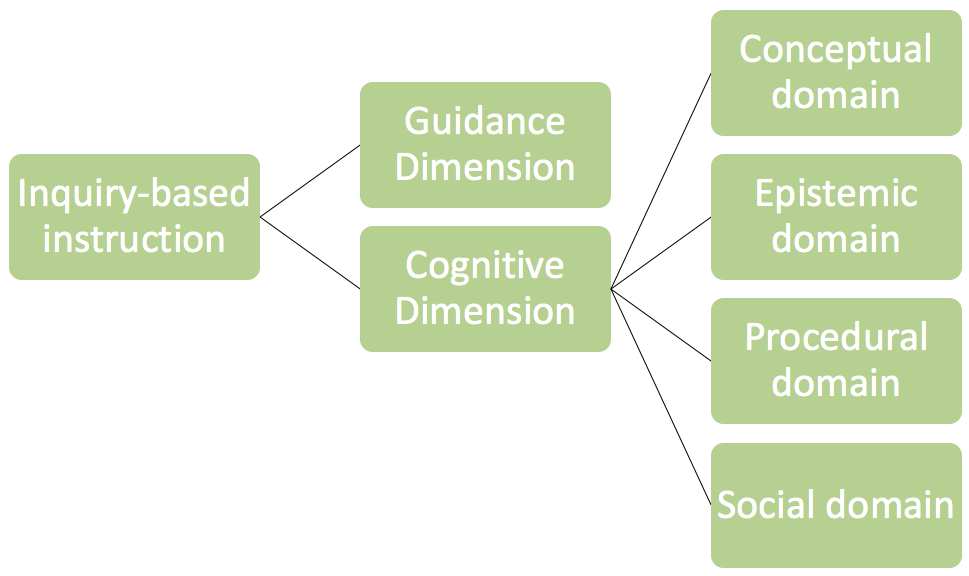

In Chen and Tytler's paper they referenced Erin Furtak's 2012 paper, ‘Experimental and quasi-experimental studies of inquiry-based science teaching: A meta-analysis'. I jumped into this paper and found a much more nuanced approach. Furtak presented two dimensions of inquiry learning, cognitive and guidance, with the cognitive dimension split into four domains. I've summarised the framework in the following image:

Here's a basic summary of each dimension and domain:

- Guidance Dimension
  - This is the one we're all familiar with. Furtak explains it with a diagram of this spectrum, with ‘Teacher-guided inquiry' added in the middle.
- Cognitive Dimension, made up of:
  - Conceptual domain
    - Furnishing students with an understanding of the ‘concepts' of science. This can be thought of broadly as knowledge and the relationships between various bits of information.
  - Epistemic domain
    - Exploring with students, ‘How do scientists know when something is a fact or not?'
  - Procedural domain
    - Exploring with students, ‘What kind of things do scientists do to help them find out things about the world?'
  - Social domain
    - Exploring with students, ‘How do scientists communicate with each other, and with the wider world, in order to advance and communicate science?'

Fundamentally, Furtak and colleagues argue that most of the time, when discussing ‘inquiry', we're talking about what instruction looks like in the classroom, i.e., the guidance dimension. What they suggest matters more is what's actually going on in students' heads (the cognitive dimension). This is a classic surface vs. deep structure error, where we assess what's going on based on what we see on the surface instead of what's going on underneath (for more on this, see Chi, Feltovich, and Glaser on how expert and novice physicists classify problems differently based on either surface or deep-structure features).

We're so good at making this mistake, in fact, that of the 37 papers that Furtak and colleagues examined, they found that:

“many of the experimental studies performed in [the decade during which inquiry was the main focus of science education reform, 1996-2006,] did not actually study inquiry-based teaching and learning per se, but rather contrasted different forms of instructional scaffolds that did not substantively change the ways in which students engaged in the domains of inquiry” (pg. 323)

Said another way, from a cognitive perspective, in 13 out of the 37 papers (that's 35%), there was no difference between the way that the control group (unchanged instruction) and the ‘inquiry' group treated the content!!!

Does that mean that when people argue about ‘inquiry' vs. ‘explicit' instruction, 35% of the time they're not actually arguing about any difference at all?

Maybe.

After sifting through the (limited number of remaining) studies, Furtak and colleagues made the following suggestion:

“the evidence from these studies suggests that teacher-led inquiry lessons have a larger effect on student learning than those that are student led.”

So it turns out the oxymoron of ‘teacher-led inquiry' is actually a pretty effective method of instruction. Go figure.

What I took from this paper was that the ‘inquiry' in ‘inquiry-based instruction' isn't actually about who's leading the class, the students or the teachers, it's about what's going on in students' heads. As Willingham aptly puts it, ‘Review each lesson plan in terms of what the student is likely to think about. This sentence may represent the most general and useful idea that cognitive psychology can offer teachers' (2009, p. 61).

More evidence?

This finding, that ‘teacher-led inquiry' is the most effective method, is somewhat corroborated by recent research by McKinsey & Company suggesting that learning is maximised when instruction ‘combines teacher-directed instruction in most to all classes and inquiry-based learning in some.' Interestingly, this research also used PISA data, and therefore the PISA definitions.

To me this also relates to the ‘expertise reversal effect' from cognitive science (see Kalyuga, Ayres, Chandler, and Sweller, 2003). That is, as learners gain expertise in a field, more guided forms of instruction (such as explicit instruction) become less effective, and are surpassed in effectiveness by less guided forms of instruction. I spoke to Professor Andrew Martin about this in a recent podcast where we explored the more and less guided spectrum of instruction in a heap of detail.

And here's the kicker: if we look back at the Kirschner-referenced PISA image (the one with the red boxes), we see that the category sitting directly above ‘Teacher-directed' is one entitled ‘Adaptive instruction', which PISA defined as follows (p. 66):

“The teacher adapts the lesson to my class’s needs and knowledge”; “The teacher provides individual help when a student has difficulties understanding a topic or task”; and “The teacher changes the structure of the lesson on a topic that most students find difficult to understand”.

This sounds a lot like ascertaining a student's level of expertise in a given domain, then providing support accordingly, i.e., more or less guided (why haven't we been talking about this definition more?!?)

Reflecting back on my own classroom

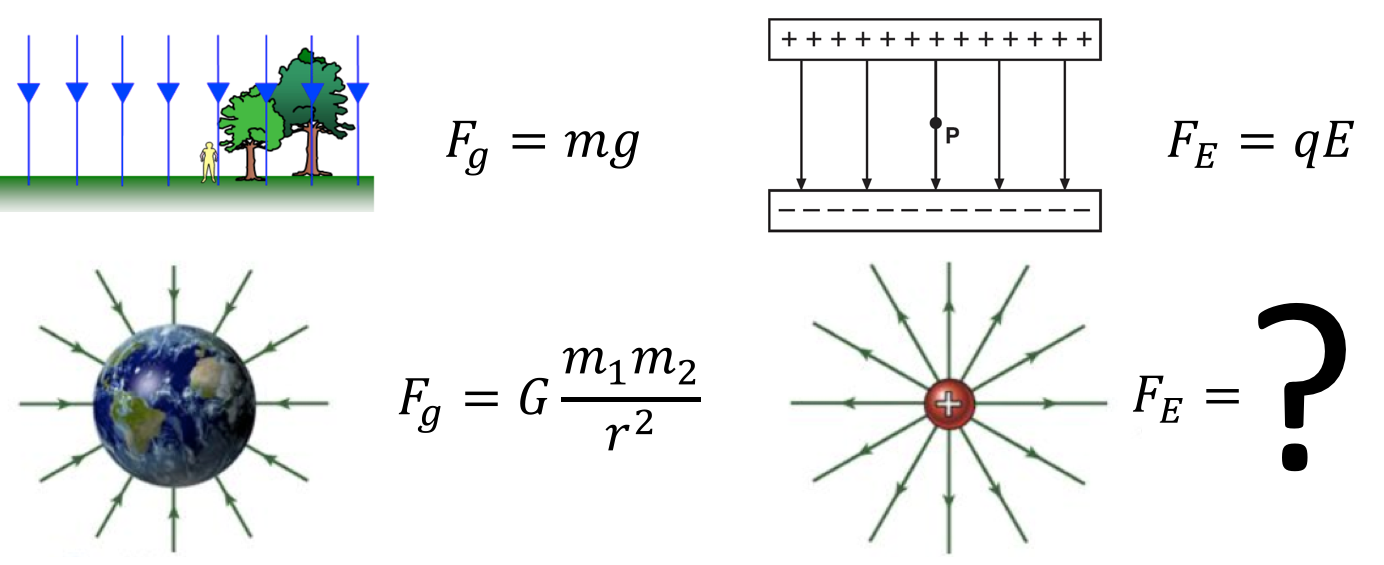

As I thought about my physics classes' rating of my teaching, I revisited my lesson plans to see whether any of what I do would fit into the category of ‘teacher-led inquiry'. Maybe this does:

An image that I leave students to think about and discuss as they try to work out the answer (but for no more than about 2-3 mins).

Maybe some of these questions do too:

- What falls faster, a feather or a bowling ball?

- How do rockets fly in space?

- Based upon this analysis, how would one calculate the impulse from a force vs. time graph? (This followed on from a discussion of: ‘Use dimensional analysis to determine how impulse is related to force!’)

- What is energy?

- If you want to drive your car to the shops… where does the energy come from (and through which forms does it change)?
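For readers outside physics, the reasoning the impulse question steers towards can be sketched as a short derivation. This assumes the standard definition of impulse as change in momentum; the exact steps used in class aren't described here, and dimensional analysis only suggests (it cannot prove) the relationship in the second line:

```latex
\begin{align*}
  [J] = [\Delta p] &= \mathrm{kg\,m\,s^{-1}}
    = \left(\mathrm{kg\,m\,s^{-2}}\right)\mathrm{s}
    = \mathrm{N\,s} \\
  \Rightarrow\quad J &= F\,\Delta t
    \quad \text{(suggested by the units; constant force)} \\
  J &= \int_{t_1}^{t_2} F(t)\,\mathrm{d}t
    \quad \text{(area under the force vs. time graph)}
\end{align*}
```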

But really… who cares?

More than anything, this (unfinished) exploration into the distinction between inquiry and explicit instruction has left me questioning to what degree such ‘definitions' are even helpful in discussions about teaching and learning. What do we even achieve by taking sides, or trying to put our praxis into a box anyway?

Instead of asking each other ‘Do you teach by inquiry?' or ‘Are you trad or prog?', I think that a much more helpful set of questions could be something like:

- When you did that activity in class today, what did you hope that students would be thinking about?

- What did you hope the students could do by the end of today's lesson that they couldn't do at the start?

- Why did you choose to ask that question/call on that student at that time?

- How did your assessment of your students' expertise in this domain influence your choice of activities today? (except in fewer words)

- What do you think are the strengths and weaknesses of the way that you chose to check for understanding at the end of today's class?

- When are you going to re-visit this content, and how are you going to re-visit it, to ensure that students retain the key points?

- and, following any of these, ‘Why?', ‘Why?', then, ‘Why?'.

Epilogue: I watched some ‘teacher-led inquiry' science lessons in Taiwan…

Whilst I was in Taiwan recently I went and watched the teacher, Pauline, who was featured in Chen and Tytler's paper as an example of inquiry-based science teaching in Taiwan (podcast here). I watched her run four ‘teacher-led inquiry' lessons on solids, liquids, and gases with year 5 and 6 students… and it was FANTASTIC! Maybe I'll write a blog post about it some day, but for now, I thought I'd share the following brief notes.

Basic lesson format:

1. Teacher-led recap of content covered previously (which related directly to the experiment).
2. Teacher tells students how to do an experiment.
3. Teacher tells students exactly what she wants them to look at and think about whilst the experiment is taking place (what changes between before and after the heating of the popcorn/chocolate/egg?).
4. Students do the experiment.
5. Teacher runs a class discussion about what happened, and why!
6. Students eat the food.

This was all in 40 minutes, mind you, and the classroom was left spotless by the students too, even though they were making popcorn, melting chocolate, and frying eggs atop (old-school) Bunsen burners!

Students were engaged, they were expressing their ideas, they were using subject-specific vocabulary and making connections to prior learning. Pauline had high expectations of them and was rigorously questioning them on the concepts and terminology that she wanted them to be learning.

And whilst discussing and reflecting upon these 4 lessons with Pauline, we barely even used the word ‘inquiry' ; )

Edit: I enjoyed Greg Ashman's critique of this post here, to which I replied here.

Fascinating post Ollie. Don’t stop questioning!

Thanks for alerting me to your post Ollie; I really enjoyed the read and encourage you to keep thinking and challenging. Knowledge is never completely fixed or perfect, especially in relation to a dynamic activity like teaching and when our students are diverse and unpredictable. I really like what you’ve pointed out about teacher-led inquiry as this is very similar to the points @cpezaro and I made in a PD some years ago. It shouldn’t be an either/or. Surely we are skilled enough to draw on the strengths of both?

This is a fascinating post, both for what you found and your reflections on your own teaching. A couple of thoughts.

Another interesting paper is Mayer’s ‘Should there be a three-strikes rule against pure discovery learning?’ Throughout, what’s clear is that Mayer is critiquing ‘pure’ discovery learning, i.e. giving students pretty much no help at all. It’s clear from the paper that a) pure discovery learning has had its advocates and that b) it is an extremely ineffective way for students to gain knowledge and skills.

In history teaching in England, I think we’re – on average – somewhere near this ‘guided discovery’ point. The teacher sets up enquiries (so-called because they’re shaped around questions to answer, not for the kind of teaching), objectives, assessments, organises resources, works out where they will intervene, the final task… but then within this carefully-elaborated structure, students do a chunk of discovering… you might model how to examine a source of evidence then ask students to use the next one. This balances the thrill (and autonomy) of discovery with a structure to ensure it’s productive (particularly with the teacher’s ongoing interventions to capitalise on what students have learned). Adapting those PISA headings to history, that strikes me as being included in both. I suspect most teachers would say ‘Of course I do some modelling’ and ‘Of course I try to get students thinking for themselves.’

But, finally, I think there is still a meaningful distinction to be made which your questions get towards nicely. I definitely spent a lot of my early career leaving far too much of the structuring I’ve described above to my students (choice of content, methods, autonomy) and it really didn’t help – because they were novices. So I think maybe the key question would be ‘How is this lesson tailored towards the needs of a novice (or an expert) learner?’

Great post. I think those PISA questions were not very well chosen. It’s interesting to look at the level of individual questions rather than PISA’s aggregate into ‘enquiry’ and ‘teacher directed’. I think, as scientists, there has been less of a distinction in our subject than in some others because the precision of both content and structure that is required to answer a science question has never really allowed teachers to try low levels of guidance, even at the height of Nuffield, or How Science Works. There has certainly been a sense of encouraging children to make discoveries but almost always in a tightly scaffolded way that may obscure things temporarily but leads pretty inevitably to the right idea.

Having read the article a couple of days ago, I have found myself coming back to the example question about field strengths. I’m not sure if I like it or not. I just can’t decide and it is making me think about questions, which is great. I guess what I would like to know is what the question was hoping to do. Was it aimed at them learning the equation or understanding the structure of equations in general? I don’t think it can (or should?) be used for both as the prerequisites for both are different.

Great question! Where this comes in the teaching sequence is: after I’ve shared with students the analogy between mass and charge; and gravitational field strength and electric field strength. Then I just show up this picture, say, ‘what do you think?’ then walk to the back of the room and wait there for a minute or so prior to walking back to the front and asking ‘anyone got any ideas?’. Each time I’ve done it I’ve had at least one student correctly guess the formula (though they usually, logically, guess the constant is ‘E’ rather than ‘K’!) So yeah, it’s building a link between their prior knowledge (on gravitational fields and forces) and this new knowledge I’m trying to establish. Giving them an option to work it out for themselves, without wasting too much time as well. Hope that helps.

I appreciate this view of “teacher-guided inquiry”, I enjoyed and learned from the reading. I believe that the distinction between “Inquiry-based” and “fully-guided” learning IS important to deeply understand the benefits of each method for students in different stages of learning. However, I agree with your conclusion that we should constantly ask WHY we teach in certain ways. Just as we don’t want inquiry-based learning to become a “teaching recipe”, we don’t want “fully guided learning” to become one.